I use visual interfaces every day without much trouble. I'm near-sighted and have astigmatism, and I wear glasses to correct both, but I don't need to rely on audio interfaces like screen readers, voice assistants, or audio desktops. So why am I using audio interfaces?

The basics

Once, on a plane, I looked across the aisle and saw everyone sticking wires in their head and plugging into the terminal in front of them.

In real life, most cyberdecks have a display, since that's what most makers are familiar with. But in sci-fi, it's super common to see hackers plugging wires into their heads. Some makers have gotten an HMD to work, and that's rad, but it's not the same as jacking in to a neural interface.

My fellow passengers were using earbuds to connect to the terminal in the back of the seat in front of them. This surreal image got me thinking about a headless cyberdeck: one that could connect to a display but was intended to be used through audio interfaces.

Cyberdeck feasibility

Power is by far the hardest part of a cyberdeck build to get right, and displays eat up a battery. Doing away with the display is one potential solution.
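To put rough numbers on that trade-off, here is a minimal back-of-the-envelope sketch. It only assumes that runtime is battery energy (in watt-hours) divided by total power draw (in watts). The `runtime_hours` helper and every figure in it are hypothetical placeholders, not measurements from a real build, so swap in numbers from your own parts.

```python
def runtime_hours(battery_wh: float, loads_w: dict[str, float]) -> float:
    """Estimate runtime as battery energy (Wh) divided by total draw (W)."""
    total_w = sum(loads_w.values())
    if total_w <= 0:
        raise ValueError("total power draw must be positive")
    return battery_wh / total_w


# Placeholder figures for illustration only; measure your own hardware.
BATTERY_WH = 40.0
with_display = {"board": 3.0, "display": 5.0}
headless = {"board": 3.0, "audio": 0.5}

print(f"with display: {runtime_hours(BATTERY_WH, with_display):.1f} h")
print(f"headless:     {runtime_hours(BATTERY_WH, headless):.1f} h")
```

The point is the shape of the math rather than the specific values: whatever the display draws comes straight out of the denominator once it's gone.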

Open source contribution to accessibility projects

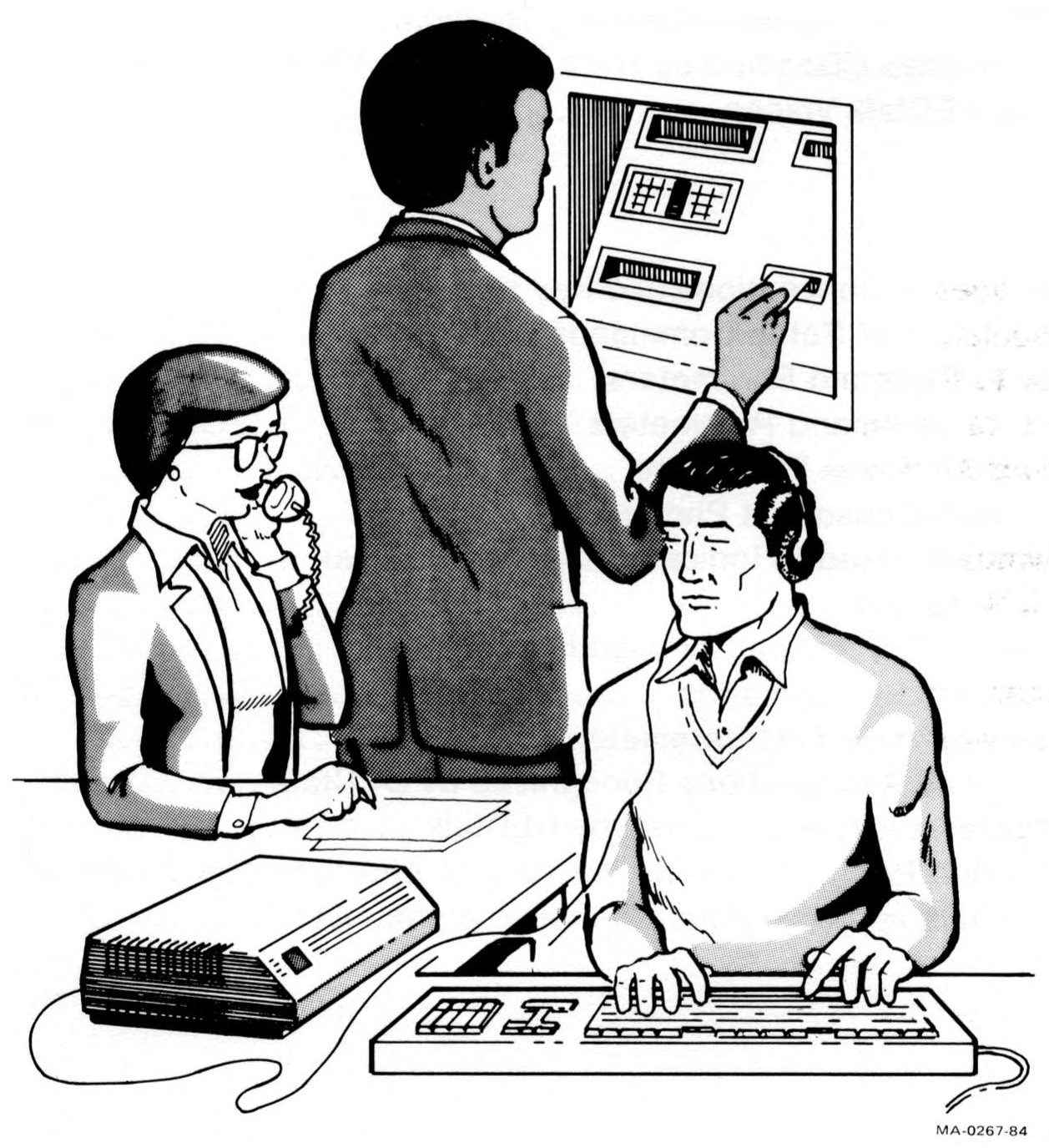

DECtalk, one of the earliest commercial TTS systems, was a headless box that looked very much like the cyberdecks of fiction when it came out in 1984. Unfortunately, it was also very expensive. Sound familiar? Tools for visually impaired users are few and far between, and the handful that do exist are often expensive, cloud-based, and messy.

Open source projects can thrive when hobbyists jump in for the fun of it.